Traditionally, parts and assemblies have been designed using worst-case analysis. Though conservative, this approach guarantees 100% interchangeability of parts in an assembly. More recently, engineers have started using statistical tolerancing instead of worst-case tolerancing for the following reasons:

- Drives appropriate behavior to hit the target, rather than meet the specification.

- Need for miniaturization of parts. Tolerances do not scale down when parts are scaled down.

- Done properly, worst-case tolerancing yields tight tolerances. The cost of holding the tight worst-case tolerances needed to meet functional requirements would be prohibitive.

- A must for meeting our corporate 10X and Six Sigma goals.

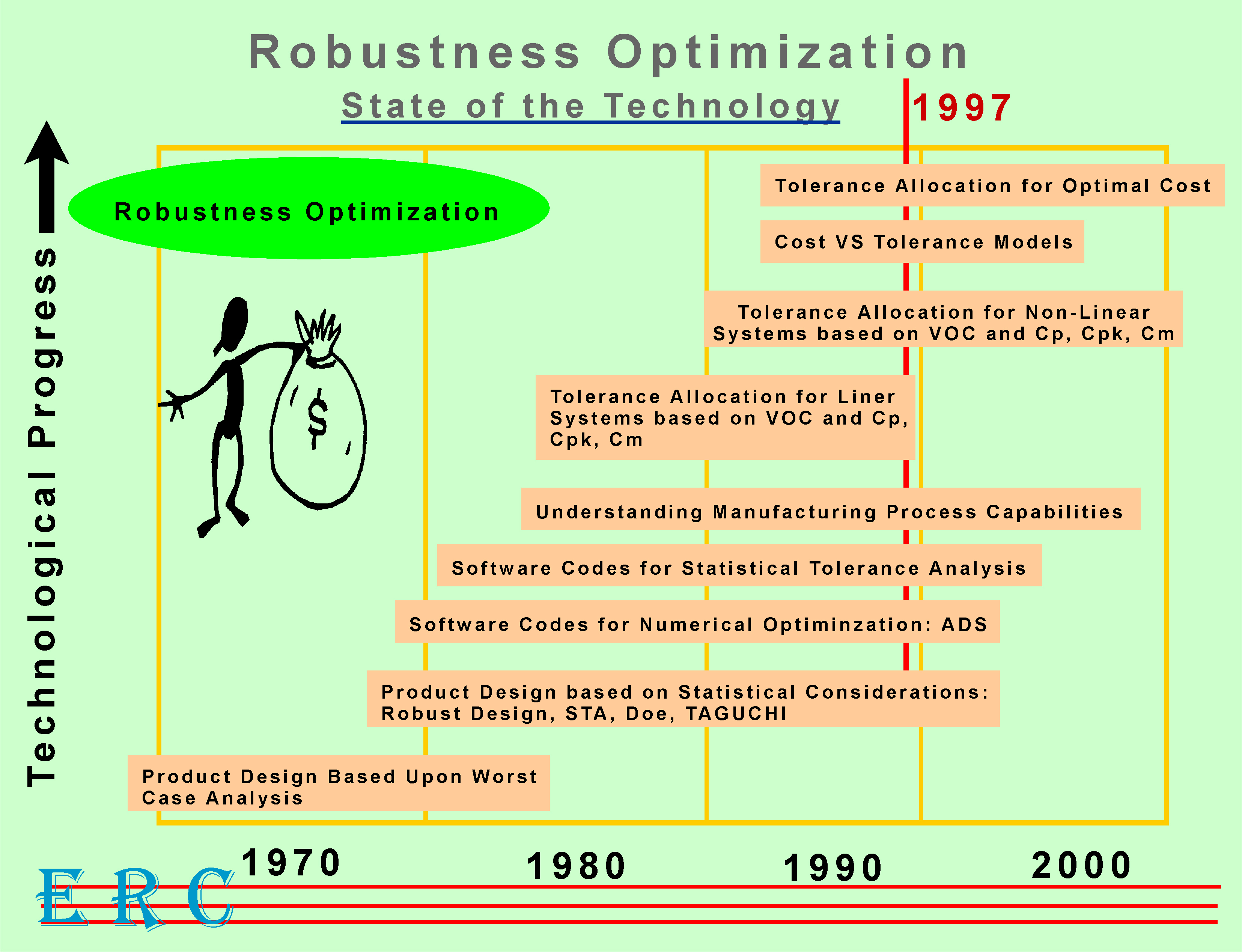

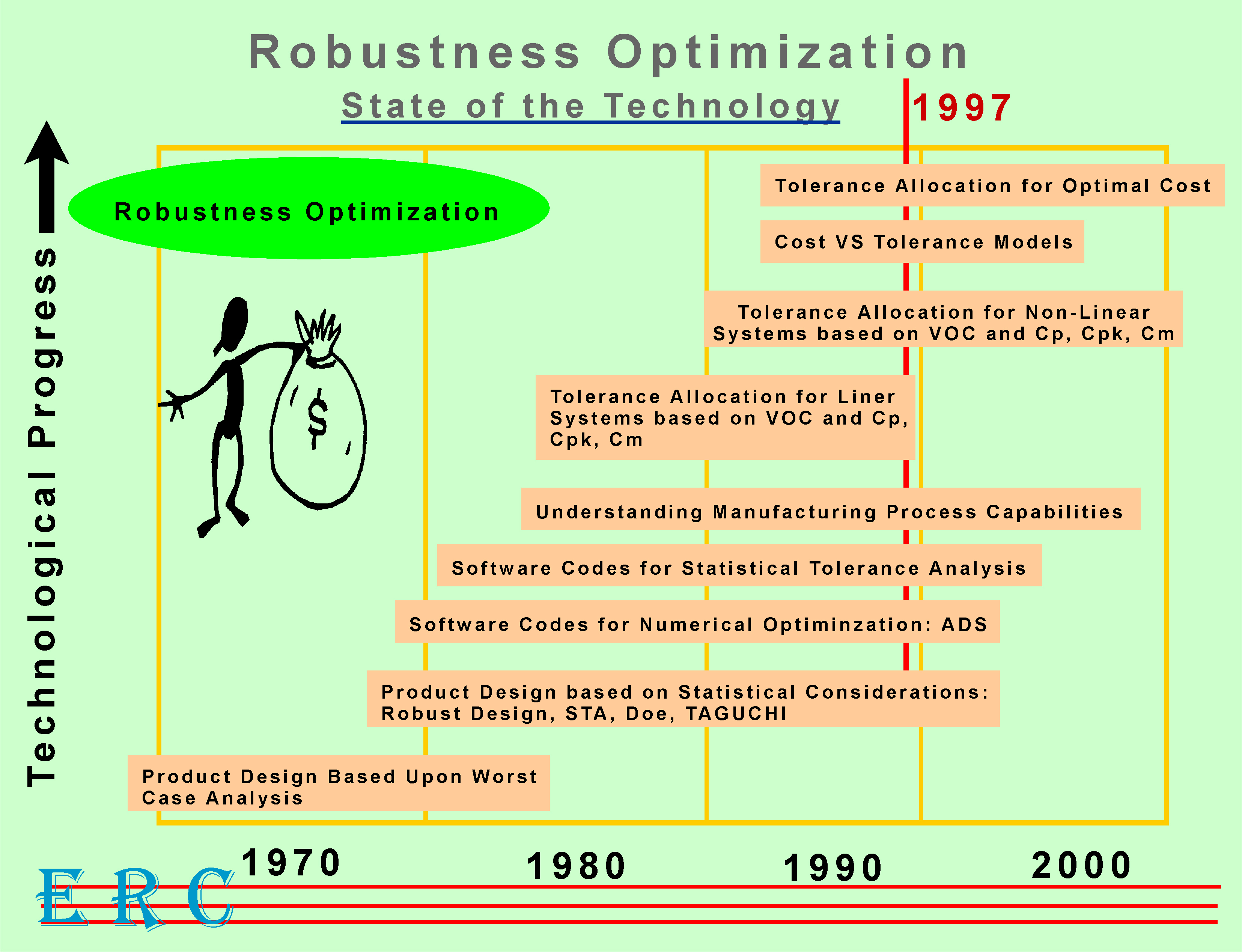

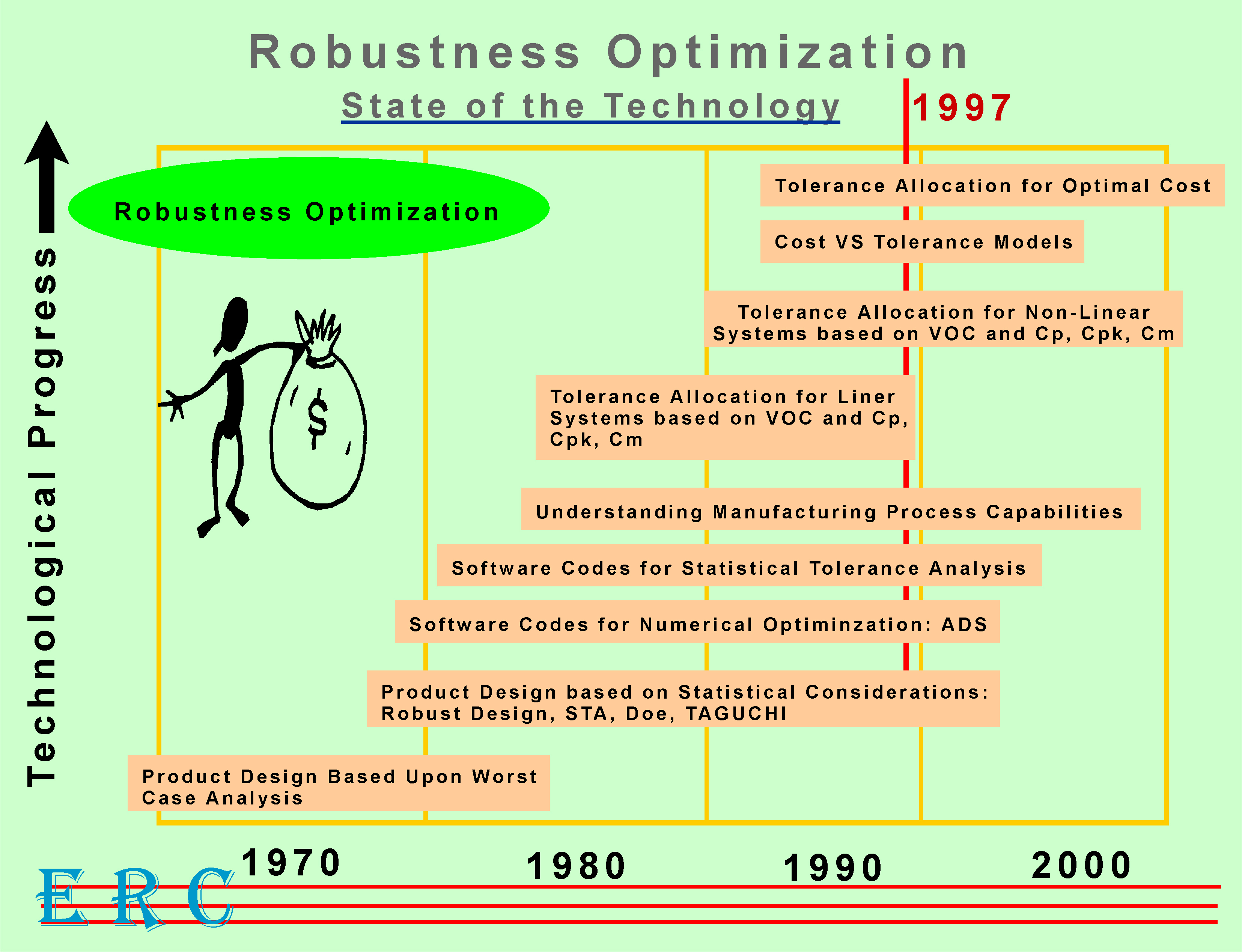
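The contrast between the two approaches can be illustrated with a minimal sketch for a linear stack-up. It assumes the common root-sum-square (RSS) form of statistical tolerancing, with independent contributors centered on their targets; the function names and the four-part stack with ±0.10 mm tolerances are hypothetical values chosen for illustration, not data from this work.

```python
import math


def worst_case_stack(tolerances):
    """Worst-case stack-up: individual tolerances add linearly."""
    return sum(abs(t) for t in tolerances)


def rss_stack(tolerances):
    """Root-sum-square stack-up.

    Assumes independent contributors that are centered on target,
    which is an assumption of this sketch.
    """
    return math.sqrt(sum(t * t for t in tolerances))


# Hypothetical linear stack: four parts, each toleranced at +/-0.10 mm.
tolerances = [0.10, 0.10, 0.10, 0.10]

wc = worst_case_stack(tolerances)
rss = rss_stack(tolerances)

print(f"worst-case assembly tolerance: +/-{wc:.3f} mm")
print(f"RSS assembly tolerance:        +/-{rss:.3f} mm")
```

For the same part tolerances, the RSS estimate of assembly variation is smaller than the worst-case sum. Read the other way, a given assembly requirement can be met with looser part tolerances under the statistical model, provided its assumptions hold.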

Usable tools for applying statistical tolerancing were developed in the 1980s. However, the lack of probabilistic data for manufacturing processes has been one of the reasons preventing the widespread application of statistical tolerancing. Over the past few years, a significant amount of work has been done to understand and evaluate manufacturing process capabilities and metrics such as Cp, Cpk, and Cm.
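As a rough illustration of the capability metrics mentioned above, the sketch below computes Cp and Cpk using their standard textbook definitions. The specification limits and measurement values are hypothetical, and the sample standard deviation is used as the estimate of process spread; Cm (machine capability) is omitted because its definition depends on the short-term study being used.

```python
import statistics


def cp(usl, lsl, sigma):
    """Process potential: specification width over six standard deviations."""
    return (usl - lsl) / (6 * sigma)


def cpk(usl, lsl, mean, sigma):
    """Process capability: distance from the mean to the nearer limit,
    over three standard deviations."""
    return min(usl - mean, mean - lsl) / (3 * sigma)


# Hypothetical measurements (mm) and specification limits for one dimension.
samples = [10.02, 9.98, 10.05, 10.01, 9.97, 10.03, 10.00, 10.04]
usl, lsl = 10.30, 9.70

mean = statistics.mean(samples)
sigma = statistics.stdev(samples)  # sample standard deviation

print(f"mean = {mean:.4f}, sigma = {sigma:.4f}")
print(f"Cp  = {cp(usl, lsl, sigma):.2f}")
print(f"Cpk = {cpk(usl, lsl, mean, sigma):.2f}")
```

Cpk equals Cp only when the process mean sits midway between the limits; any off-center shift lowers Cpk, which is why it reflects how well the process hits the target rather than merely how narrow it is.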

The tolerance allocation methods we have developed in the last two years are applicable to linear systems. We plan to extend these methods to non-linear systems. There is also a need to develop models for "cost vs. tolerance" analysis to make educated and reasonable trade-offs between unit manufacturing cost and quality. In the future, methods that combine "cost vs. tolerance" data with tolerance analysis and optimization techniques would enable optimization of a product at the system or sub-system level for minimum life-cycle cost.